arXiv 2023

Ins-HOI: Instance Aware Human-Object Interactions Recovery

Jiajun Zhang1, Yuxiang Zhang2, Hongwen Zhang3, Boyao Zhou2, Ruizhi Shao2, Zonghai Hu1, Yebin Liu2

1Beijing University of Posts and Telecommunications, 2Tsinghua University, 3Beijing Normal University

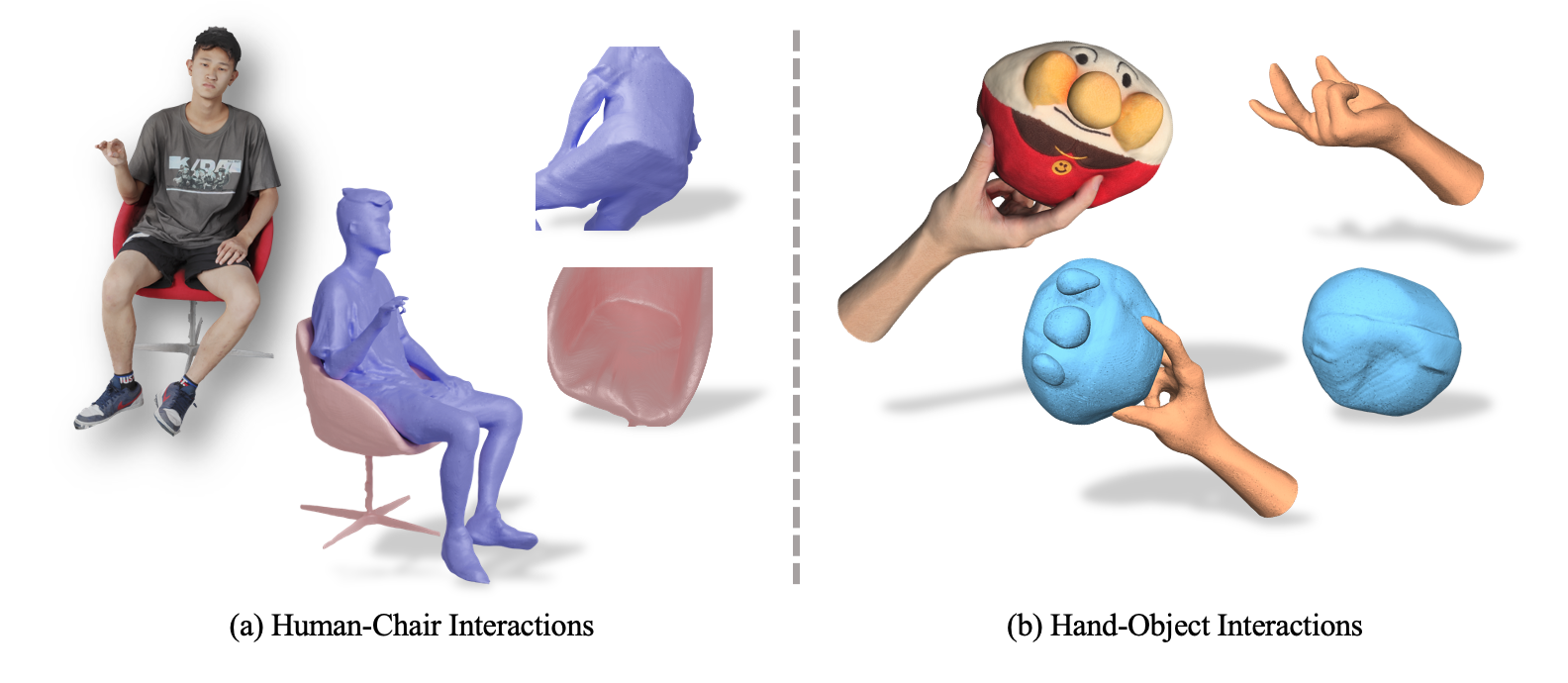

We present Ins-HOI, a novel method for instance-level recovery of human/hand-object interactions. Ins-HOI is an implicit approach that achieves accurate reconstruction of the individual geometry of each instance, including non-visible contact areas.

Abstract

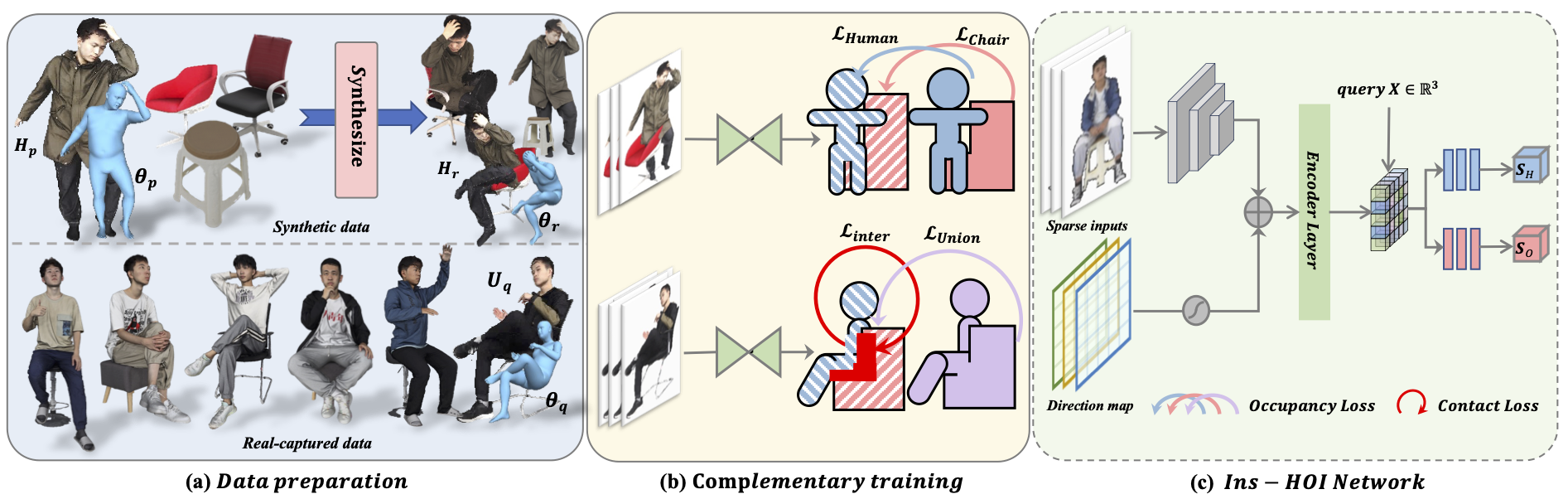

Recovering detailed interactions between humans/hands and objects is an appealing yet challenging task. Existing methods typically use template-based representations to track humans/hands and objects during interactions. Despite this progress, they fail to handle invisible contact surfaces. In this paper, we propose Ins-HOI, an end-to-end solution that recovers human/hand-object interactions via instance-level implicit reconstruction. To this end, we introduce an instance-level occupancy field that represents the human/hand and the object simultaneously, together with a complementary training strategy that compensates for the lack of instance-level ground truth. This representation enables the network to learn a contact prior implicitly from sparse observations. During complementary training, we augment the real-captured data with synthesized data, created by randomly composing individual scans of humans/hands and objects while intentionally allowing penetration. In this way, our network learns to recover individual shapes as completely as possible from the synthesized data, while remaining aware of contact constraints and overall plausibility from the real-captured scans. As demonstrated in our experiments, Ins-HOI produces reasonable and realistic non-visible contact surfaces even in cases of extremely close interaction. To facilitate research on this task, we collect a large-scale, high-fidelity 3D scan dataset comprising 5.2k high-quality scans of real-world human-chair and hand-object interactions. We will release our dataset and source code.
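The key idea behind the synthesized data is that, when two individual scans are composed, the complete shape of each instance is still known, even inside the region where they overlap. The sketch below illustrates this with toy spheres standing in for a human scan and an object scan; the sphere sizes, the random-offset scheme, and the function names `sphere_occupancy` and `compose_instances` are hypothetical and are not the authors' data pipeline. Each query point receives one occupancy label per instance, so a point can be inside both shapes at once.

```python
import numpy as np


def sphere_occupancy(points: np.ndarray, center: np.ndarray, radius: float) -> np.ndarray:
    """Binary occupancy of a sphere: 1.0 inside, 0.0 outside (toy stand-in for a scan)."""
    return (np.linalg.norm(points - center, axis=-1) <= radius).astype(np.float32)


def compose_instances(points: np.ndarray, rng: np.random.Generator, max_offset: float = 0.3) -> np.ndarray:
    """Hypothetical synthetic composition of one 'human' and one 'object'.

    The object is placed at a random offset from a nominal position and is NOT
    pushed out of the human, so the two shapes may interpenetrate.
    Returns per-instance labels of shape (N, 2): column 0 = human, column 1 = object.
    """
    human = sphere_occupancy(points, np.zeros(3), 0.5)
    offset = rng.uniform(-max_offset, max_offset, size=3)
    obj = sphere_occupancy(points, np.array([0.6, 0.0, 0.0]) + offset, 0.3)
    return np.stack([human, obj], axis=-1)


rng = np.random.default_rng(0)
points = rng.uniform(-1.0, 1.0, size=(10000, 3))  # query points in the cube [-1, 1]^3
labels = compose_instances(points, rng)            # complete per-instance shapes
union = labels.max(axis=-1)         # the combined shape a single real scan would show
intersection = labels.min(axis=-1)  # penetration region, only labelled in synthetic data
print(labels.shape, int(union.sum()), int(intersection.sum()))
```

Real-captured scans only provide something like `union`, while synthetic compositions also provide the per-instance columns; this difference is what a complementary training strategy can exploit.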

Overview

Overview of the method: (a) shows the synthetic data augmentation process, which uses the THuman and Ins-Sit datasets to form the training set. (b) highlights how each training component provides distinct guidance for complementary learning (blue and pink denote the human and chair meshes; purple and red indicate their union and intersection). (c) depicts our method, Ins-HOI, which takes sparse-view inputs and produces instance-level human-object reconstructions in an end-to-end manner.
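One way to read panel (b) is as two kinds of supervision on a two-channel (human, object) occupancy prediction. The sketch below assumes that synthetic samples supervise each channel directly, while real scans, which only capture the combined surface, supervise the union (per-point maximum) and penalize the intersection (per-point minimum) to discourage penetration. The loss forms, the weight `w_inter`, and the function names are hypothetical illustrations, not the paper's exact objective; a real training loop would use a differentiable framework rather than NumPy.

```python
import numpy as np

EPS = 1e-7


def bce(pred: np.ndarray, target: np.ndarray) -> float:
    """Mean binary cross-entropy between probabilities and {0, 1} targets."""
    pred = np.clip(pred, EPS, 1.0 - EPS)
    return float(-np.mean(target * np.log(pred) + (1.0 - target) * np.log(1.0 - pred)))


def complementary_loss(pred, instance_labels=None, scan_occupancy=None, w_inter=1.0):
    """Hypothetical loss for one training sample.

    pred: (N, 2) predicted occupancy probabilities for (human, object).
    Synthetic sample: per-instance labels (N, 2) exist, so each channel is supervised.
    Real sample: only the combined scan occupancy (N,) exists, so the union
    (max over instances) is supervised and the intersection (min) is penalized.
    """
    if instance_labels is not None:
        return bce(pred, instance_labels)
    union = pred.max(axis=-1)
    intersection = pred.min(axis=-1)
    return bce(union, scan_occupancy) + w_inter * float(np.mean(intersection))


scan = np.array([1.0, 1.0, 0.0])                         # combined occupancy from a real scan
clean = np.array([[1.0, 0.0], [0.0, 1.0], [0.0, 0.0]])   # union matches, no overlap
overlap = np.array([[1.0, 1.0], [0.0, 1.0], [0.0, 0.0]]) # union matches, one point in both
print(complementary_loss(clean, scan_occupancy=scan))    # ~0.0
print(complementary_loss(overlap, scan_occupancy=scan))  # ~0.333
```

Both predictions explain the real scan equally well through their union; only the intersection term separates them, which is the kind of contact constraint that real-captured data can contribute without instance-level ground truth.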

THuman-Sit and THuman-Grasp Datasets

The Ins-Sit dataset comprises 4700 scans of 72 subjects (12 female, 60 male). Each subject sat on 2 of the 11 distinct chair types and performed 60 unique poses.

The Ins-Grasp dataset comprises 500 scans covering 50 object types, each involved in 10 distinct interactions.
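As a quick consistency check, the per-dataset counts above add up to the total quoted in the abstract:

```latex
$$
\underbrace{50 \times 10}_{\text{Ins-Grasp}} = 500, \qquad
\underbrace{4700}_{\text{Ins-Sit}} + 500 = 5200 \approx 5.2\text{k scans}.
$$
```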

Demo Video

Real-World Dynamic Demo

1 of 6 input views

Supplementary Results

THuman-Sit: input data | Ins-HOI results | human part | chair part

THuman-Grasp: input data | Ins-HOI results | hand part | object part

Citation

@article{zhang2023inshoi,
  title={Ins-HOI: Instance Aware Human-Object Interactions Recovery},
  author={Zhang, Jiajun and Zhang, Yuxiang and Zhang, Hongwen and Zhou, Boyao and Shao, Ruizhi and Hu, Zonghai and Liu, Yebin},
  journal={arXiv preprint arXiv:2312.09641},
  year={2023}
}